I suspect you’re no more in the mood this week to read about business strategy, IT strategy, the intersection of business and IT … my usual stuff … than I was to write about it. And I vowed not to talk about anyone named Trump, Pence, Biden, or Harris, on the grounds that the odds of my having anything new to say are vanishingly small.

So I took one from the vault this week, originally published three years ago. For one reason or another it seems fitting this week. Hope you enjoy it.

# # #

The problem with quadrant charts isn’t that they have two axes and four boxes. It’s the magic part — why their contents are what they are.

Well, okay, that’s one of the problems. Another is that once you (you being me, that is) get in the quadrant habit, new ones pop into your head all the time.

Like, for example, this little puppy that came to me while I was watching Kong: Skull Island as my Gogo inflight movie.

It’s a new, Gartnerized test of actorhood. Preposterousness is the vertical axis. Convincing portrayal of a character is the horizontal. In Kong, Samuel L. Jackson, Tom Hiddleston, and John C. Reilly made the upper right. I leave it to KJR’s readers to label the quadrants.

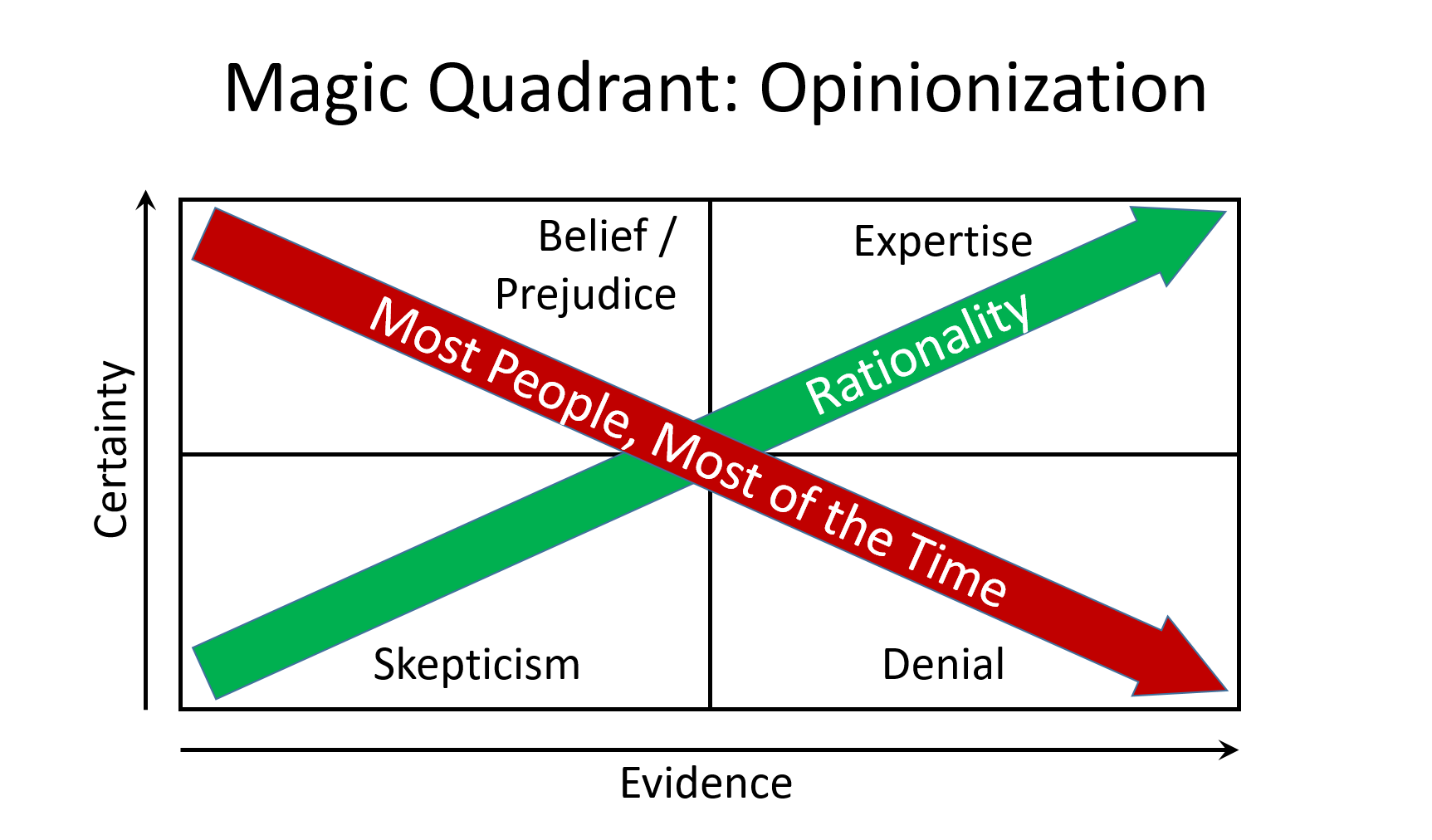

While this might not be the best example, quadrant charts can be useful for visualizing how a bunch of stuff compares. Take, for example, my new Opinionization Quadrant. It visualizes the different types of thinking you and I run across all the time … and, if we’re honest with each other, the ones we ourselves engage in as well.

It’s all about evidence and certainty. No matter the subject, more and better evidence is what defines expertise and should be the source of confident opinion.

Less and worse evidence should lead to skepticism, along with a desire to obtain more and better evidence unless apathy prevails.

When more and better evidence doesn’t overcome skepticism, that’s just as bad as prejudice and as unfounded as belief. It’s where denial happens — in the face of overwhelming evidence someone is unwilling to change their position on a subject.

Rationality happens when knowledge and certainty positively correlate. Except there’s so much known about so many subjects that, with the possible exception of Professor Irwin Corey (the world’s foremost authority), we should all be completely skeptical about just about everything.

So we need to allow for once-removed evidence: reporting about those subjects we lack the time or, in some cases, the genius to become experts in ourselves.

No question, once-removed evidence — journalism, to give it a name — does have a few pitfalls.

The first happens when we … okay, I start my quest for an opinion in the Belief/Prejudice quadrant. My self-knowledge extends to knowing I’m too ignorant about the subject to have a strongly held opinion, but not to acknowledging to myself that my strongly held opinion might be wrong.

And so off I go, energetically Googling for ammunition rather than illumination. This being the age of the Internet and all, someone will have written exactly what I want to read, convincingly enough to stay within the boundaries set by my confirmation bias.

This isn’t, of course, actual journalism, but it can look a lot like it to the unwary.

The second need for care is understanding the nature and limits of reportage.

Start here: Journalism is a profession. Journalists have to learn their trade. And like most professions it’s an affinity group. Members in good standing care about the respect and approval of other members in good standing.

So when it comes to reporting on, say, social or political matters, professional reporters might have liberal or conservative inclinations, but they are less likely to root their reporting in their political affinity than you or I would be.

Their affinity, when reporting, is to their profession, not to where they sit on the political spectrum. Given a choice between supporting politicians they agree with and publishing an exclusive story damaging to those same politicians, they’ll go with the scoop every time.

IT journalism isn’t all that different, except that instead of being accused of liberal or conservative bias, IT writers are accused of being Apple or Microsoft (or Oracle, or open source) fanboys.

Also: As with political writing, there’s a difference between professional reporters and opinionators. In both politics and tech, opinionators are much more likely than reporters to be aligned with one camp or another. Me too, although I try to keep a grip on it.

And in tech publishing the line separating reporting and opinion isn’t as bright and clear as it is in political reporting. It can’t be. With tech, true expertise often requires deep knowledge of a specific product line, so affinity bias is hard to avoid. Also, many of us who write in the tech field aren’t degreed journalists. We’re pretty good writers who know the territory, so our journalistic affinity is more limited.

There’s also tech pseudojournalism, where those who are reporting and opinionating (and, for that matter, quadrant-izing) work for firms that receive significant sums from those being reported on.

As Groucho said so long ago, “Love flies out the door when money comes innuendo.”